With everything in place, I launched the hadoop command:

hadoop jar wordcount.jar org.myorg.wordcount.WordCount /usr/joe/wordcount/input /usr/joe/wordcount/output

and surprisingly, it kept hanging at map 0%, reduce 0%.

I had to kill the virtual machine and reboot.

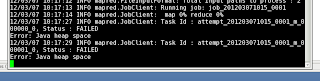

The next time, I got the following output:

Hmm, so it seems Hadoop is not happy with the amount of heap space it can get.

After some trial and error, the solution was to increase the memory allocated to the virtual machine. The Cloudera VM comes configured with 1 GB of memory. Changing this to 2 GB and rebooting solved the problem.
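To confirm that the new memory setting actually reached the guest, a quick check from inside the VM can help. The following is only a minimal sketch, assuming a Linux guest that exposes `/proc/meminfo`. The names `REQUIRED_MB`, `kb_to_mb` and `check_memory` are made up for this illustration, and the 1800 MB threshold is an arbitrary choice (a VM configured with 2 GB usually reports slightly less than 2048 MB).

```shell
#!/bin/sh
# Hypothetical threshold in MB; chosen a bit below 2048 because the kernel
# reserves some memory and MemTotal is usually lower than the configured size.
REQUIRED_MB=1800

# Convert a value in kB (the unit used in /proc/meminfo) to whole MB.
kb_to_mb() {
    echo $(( $1 / 1024 ))
}

# Print "ok" if the given amount of memory (in MB) meets the threshold,
# "low" otherwise.
check_memory() {
    if [ "$1" -ge "$REQUIRED_MB" ]; then
        echo "ok"
    else
        echo "low"
    fi
}

# Only read /proc/meminfo when it exists (Linux guests).
if [ -r /proc/meminfo ]; then
    total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    total_mb=$(kb_to_mb "$total_kb")
    echo "Total memory: ${total_mb} MB ($(check_memory "$total_mb"))"
fi
```

If this reports "low" after the change, the VM probably still runs with the old setting and needs a full power-off and restart rather than a guest reboot.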

Comments:

Thanks, this helped me fix my problem.
